- HOW TO INSTALL APACHE SPARK IN MY MACHINE HOW TO

- HOW TO INSTALL APACHE SPARK IN MY MACHINE SOFTWARE

- HOW TO INSTALL APACHE SPARK IN MY MACHINE DOWNLOAD

Setting the PATH variable will locate the Spark executables in the location /usr/local/spark/bin.

We add the above line ~/.bashrc file and save it. Let’s setup the environment variable for Apache Spark - $ source ~/.bashrcĮxport PATH = $PATH: /usr/local/spark/bin # mv spark-1.6.1-bin-hadoop2.6 /usr/local/spark Move the spark downloaded files from the downloads folder to your local system where you plan to run your spark applications. (In this spark tutorial, we are using spark-1.3.1-bin-hadoop2.6 version) $ tar xvf spark-1.6.1-bin-hadoop2.6.tgz To install spark, extract the tar file using the following command:

HOW TO INSTALL APACHE SPARK IIN MY MACHINE DOWNLOAD

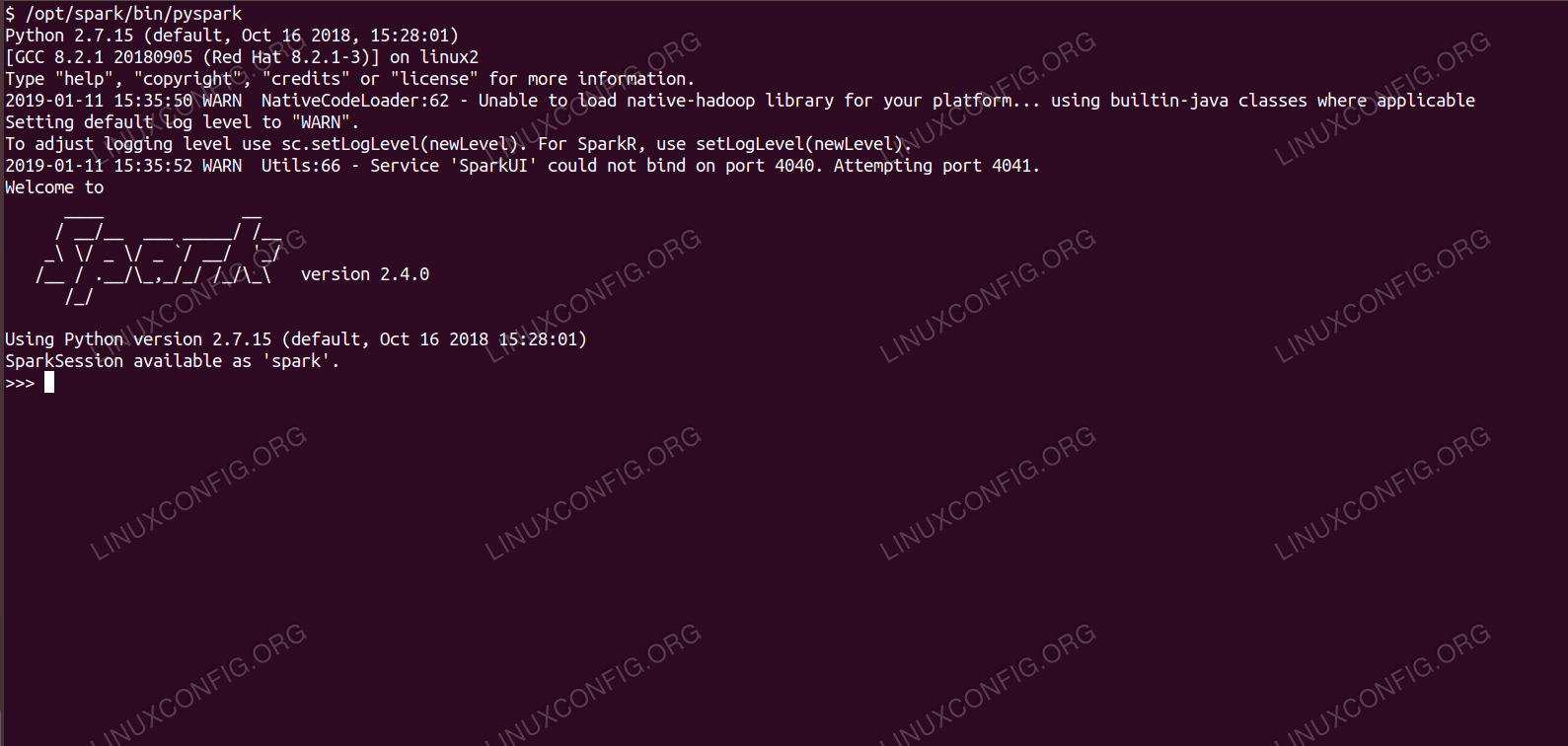

Step 3: Download and Install Apache Spark:ĭownload the latest version of Apache Spark (Pre-built according to your Hadoop version) from this link: Apache Spark Download LinkĬheck the presence of. Let’s install Scala first - $sudo apt-get install scala In the above screenshot, Scala programming language is not installed on my system. In case Scala is already installed on your system, it will display the version details. Learn Hadoop by working on interesting Big Data and Hadoop Projects Use the following command to check if Scala is installed - $scala -version Step 2 – Verify if Spark is installedĪs Apache Spark is used through Scala programming language, Scala should be installed to proceed with installing spark cluster in Standalone mode. Follows the steps listed under “Install Java” section of the Hadoop Tutorial to proceed with the Installation. In case Java is not installed then head on to our Hadoop Tutorial for Installation.

The above screenshot shows the version details of the Java installed on the machine. Use the following command to verify if Java is installed - $java -version

HOW TO INSTALL APACHE SPARK IIN MY MACHINE SOFTWARE

Java is a pre-requisite software for running Spark Applications. Getting Started with Apache Spark Standalone Mode of Deployment Step 1: Verify if Java is installed This is an add-on to the standalone deployment where Spark jobs can be launched by the user and they can use the spark shell without any administrative access. It integrates Spark on top Hadoop stack that is already present on the system. For computations, Spark and MapReduce run in parallel for the Spark jobs submitted to the cluster.Īpache Spark runs on Mesos or YARN (Yet another Resource Navigator, one of the key features in the second-generation Hadoop) without any root-access or pre-installation.

Spark is deployed on the top of Hadoop Distributed File System (HDFS).

HOW TO INSTALL APACHE SPARK IIN MY MACHINE HOW TO

Pre-requisites to Getting Started with this Apache Spark Tutorialīefore you get a hands-on experience on how to run your first spark program, you should have.
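
The Java and Scala checks, the tar and mv commands, and the ~/.bashrc PATH line in this tutorial can be collected into a few reusable shell functions. This is a minimal sketch, assuming a POSIX-style shell such as bash, sudo access, and the spark-1.6.1-bin-hadoop2.6.tgz archive used here; the function names (have_cmd, check_prereqs, install_spark, add_spark_to_path) and the SPARK_HOME_DIR variable are hypothetical helpers, not part of Spark. It also assumes the archive unpacks into a directory named after the file, as the tutorial's mv command does.

```shell
#!/usr/bin/env bash
# Hypothetical helper functions wrapping this tutorial's commands.
# Assumes sudo access and the spark-1.6.1-bin-hadoop2.6.tgz archive.
# Source this file; it only defines functions and runs nothing by itself.

# Install location used in this tutorial (hypothetical variable name).
SPARK_HOME_DIR="/usr/local/spark"

# Return 0 if the named command is on the PATH, 1 otherwise.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# Steps 1 and 2: report whether java and scala are available.
# Returns 1 if either is missing, 0 if both are found.
check_prereqs() {
  local tool missing=0
  for tool in java scala; do
    if have_cmd "$tool"; then
      echo "$tool: found at $(command -v "$tool")"
    else
      echo "$tool: not found; install it before continuing" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Step 3: extract the archive in the current directory, then move the
# resulting directory to SPARK_HOME_DIR (the tutorial's tar and mv commands).
# Assumes the archive unpacks into a directory named after the file.
install_spark() {
  local archive="$1"            # e.g. spark-1.6.1-bin-hadoop2.6.tgz
  local dir="${archive%.tgz}"   # e.g. spark-1.6.1-bin-hadoop2.6
  if [ -e "$SPARK_HOME_DIR" ]; then
    # mv would nest the new directory inside the existing one, so stop.
    echo "$SPARK_HOME_DIR already exists; not overwriting it" >&2
    return 1
  fi
  tar xvf "$archive" || return 1
  sudo mv "$dir" "$SPARK_HOME_DIR"
}

# Append the Spark bin directory to PATH in the given shell rc file,
# skipping the append if the exact line is already present.
add_spark_to_path() {
  local rc_file="$1"
  local line="export PATH=\$PATH:${SPARK_HOME_DIR}/bin"
  touch "$rc_file"
  if ! grep -qxF "$line" "$rc_file"; then
    printf '%s\n' "$line" >> "$rc_file"
  fi
}
```

To use it, save the functions to a file, source it in your shell, run check_prereqs, then run install_spark spark-1.6.1-bin-hadoop2.6.tgz from the downloads folder, and finally run add_spark_to_path ~/.bashrc followed by source ~/.bashrc. The duplicate check in add_spark_to_path is a design choice so that re-running the setup does not keep appending the same export line to ~/.bashrc.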
